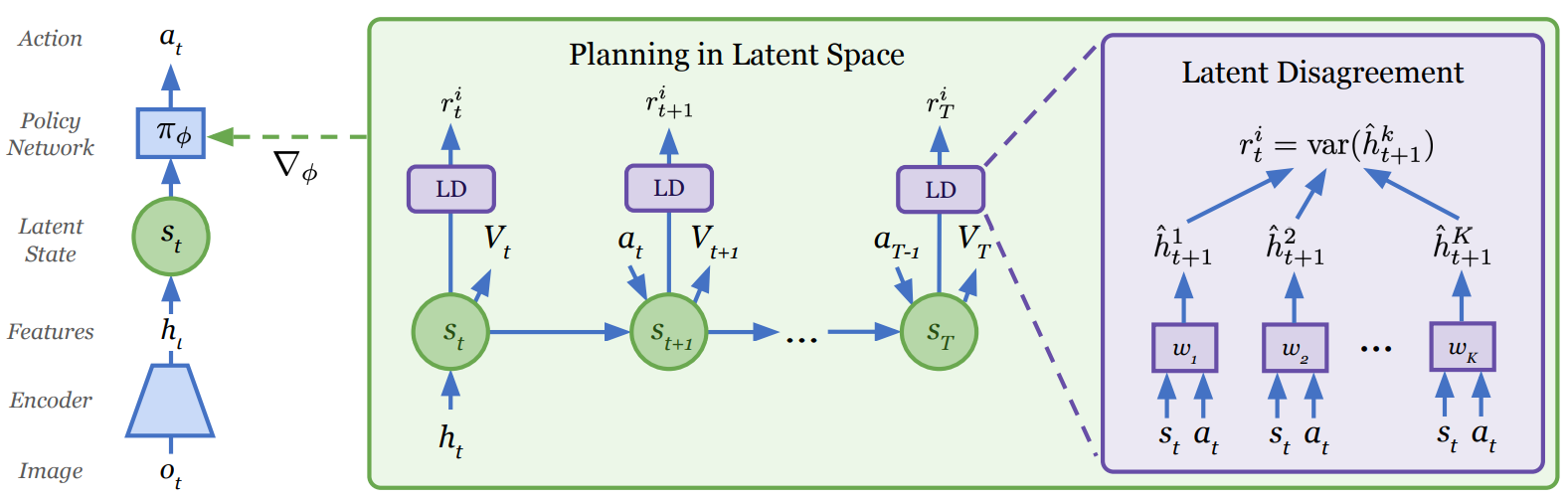

Reinforcement learning allows solving complex tasks, however, the learning tends to be task-specific and the sample efficiency remains a challenge. We present Plan2Explore, a self-supervised reinforcement learning agent that tackles both these challenges through a new approach to self-supervised exploration and fast adaptation to new tasks, which need not be known during exploration. During exploration, unlike prior methods which retrospectively compute the novelty of observations after the agent has already reached them, our agent acts efficiently by leveraging planning to seek out expected future novelty. After exploration, the agent quickly adapts to multiple downstream tasks in a zero or a few-shot manner. We evaluate challenging control tasks from high-dimensional image inputs. Without any training supervision or task-specific interaction, Plan2Explore outperforms prior self-supervised exploration methods, and in fact, almost matches the performance oracle which has access to rewards.

URL: arxiv.org/abs/2005.05960

Topic: Latent Dynamics

Video: www.youtube.com/watch?v=IiBFqnNu7A8

Conference: ICML 2020

'Deep Learning > 강화학습' 카테고리의 다른 글

| [2018.02] Diversity is all you need: Learning skills without a reward function (0) | 2021.05.08 |

|---|---|

| [2019.03] Model-Based Reinforcement Learning for Atari (0) | 2021.05.07 |

| [2018.08] SOLAR: Deep Structured Representations for Model-Based Reinforcement Learning (0) | 2021.05.01 |

| [2017.05] Constrained Policy Optimization (0) | 2021.04.25 |

| [2018.03] Policy Optimization with Demonstration (0) | 2021.04.23 |