When it comes to NAS (Neural Architecture Search), obtaining a state-of-the-art architecture requires 2000 GPU days of reinforcement learning (RL) (Zoph et al., 2018) or 3150 GPU days of evolution (Real et al., 2018). An inherent cause of this inefficiency is that architecture search is treated as a black-box optimization problem over a discrete domain, which requires a large number of architecture evaluations.

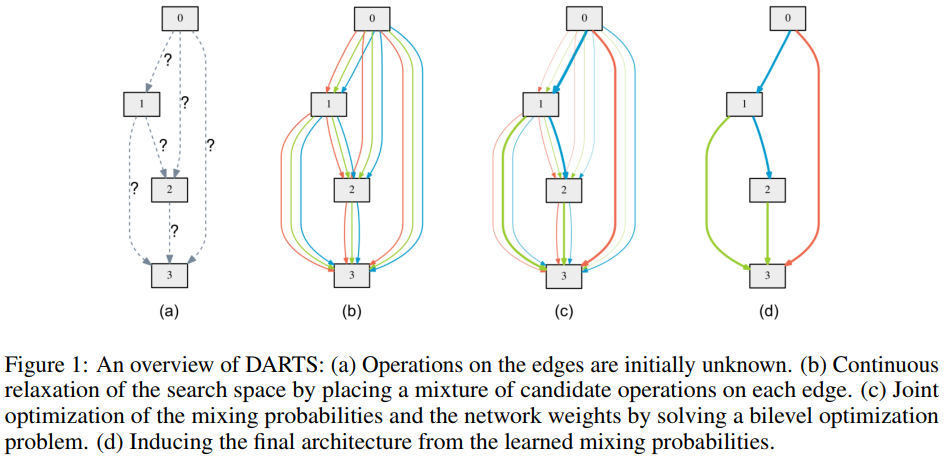

In this work, instead of searching over a discrete set of candidate architectures, we relax the search space to be continuous, so that the architecture can be optimized with respect to its validation set performance by gradient descent. Notably, DARTS (Differentiable ARchitecture Search) is simpler than many existing approaches, as it does not involve controllers (Zoph & Le, 2017; Baker et al., 2017; Zoph et al., 2018; Pham et al., 2018b; Zhong et al., 2018), hypernetworks (Brock et al., 2018) or performance predictors (Liu et al., 2018a), yet it is generic enough to handle both convolutional and recurrent architectures.
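The continuous relaxation can be illustrated with a toy example. As we understand the DARTS paper, each edge computes a softmax(α)-weighted sum of all candidate operations, the architecture parameters α are updated on the validation loss while the network weights w are updated on the training loss, and the final discrete architecture is read off by taking the argmax of α. The sketch below is a minimal, pure-Python illustration under heavy assumptions: a single edge, three hypothetical scalar operations (`identity`, `zero`, `scale`), a made-up target y = 3x, finite-difference gradients standing in for autodiff, and the first-order approximation (α is updated with the current w, without an unrolled weight step). None of the names come from the official DARTS code.

```python
import math

# Hypothetical toy "operations" on a single edge; not DARTS' real op set.
OPS = {
    "identity": lambda x, w: x,
    "zero": lambda x, w: 0.0,
    "scale": lambda x, w: w * x,  # the only op with a trainable weight w
}
NAMES = list(OPS)


def softmax(alpha):
    m = max(alpha)  # subtract the max for numerical stability
    exps = [math.exp(a - m) for a in alpha]
    total = sum(exps)
    return [e / total for e in exps]


def mixed_op(x, w, alpha):
    """Continuous relaxation: softmax(alpha)-weighted sum of all candidate ops."""
    probs = softmax(alpha)
    return sum(p * OPS[name](x, w) for p, name in zip(probs, NAMES))


def mse(xs, w, alpha):
    # ASSUMPTION: the toy target function is y = 3x.
    return sum((mixed_op(x, w, alpha) - 3.0 * x) ** 2 for x in xs) / len(xs)


def num_grad(f, v, h=1e-5):
    """Central finite differences, standing in for autodiff."""
    grads = []
    for i in range(len(v)):
        up, down = list(v), list(v)
        up[i] += h
        down[i] -= h
        grads.append((f(up) - f(down)) / (2 * h))
    return grads


def search(train_xs, val_xs, steps=2000, lr_w=0.02, lr_alpha=0.02):
    w, alpha = 1.0, [0.0] * len(NAMES)
    for _ in range(steps):
        # 1) Architecture step: descend the validation loss w.r.t. alpha,
        #    holding w fixed (first-order approximation).
        g_alpha = num_grad(lambda a: mse(val_xs, w, a), alpha)
        alpha = [a - lr_alpha * g for a, g in zip(alpha, g_alpha)]
        # 2) Weight step: descend the training loss w.r.t. w.
        g_w = num_grad(lambda v: mse(train_xs, v[0], alpha), [w])[0]
        w -= lr_w * g_w
    return w, alpha


if __name__ == "__main__":
    w, alpha = search([1.0, 2.0, 3.0], [0.5, 1.5, 2.5])
    probs = softmax(alpha)
    print({name: round(p, 3) for name, p in zip(NAMES, probs)})
    # Derive the discrete architecture by keeping the strongest op.
    print("derived op:", NAMES[probs.index(max(probs))])
```

Because every candidate op contributes to the output in proportion to softmax(α), the validation loss is differentiable in α, which is what lets gradient descent stand in for a discrete search. In this toy problem many (w, α) pairs fit the target exactly, so the derived op is not guaranteed to be `scale`.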

In our experiments (Sect. 3) we show that DARTS is able to design a convolutional cell that achieves 2.76 ± 0.09% test error on CIFAR-10 for image classification using 3.3M parameters, which is competitive with the state-of-the-art result by regularized evolution (Real et al., 2018) obtained using three orders of magnitude more computational resources.
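The "three orders of magnitude" comparison can be checked with simple arithmetic. The 3150 GPU days for regularized evolution and 2000 GPU days for RL are quoted at the top of this post; the DARTS search cost of roughly 4 GPU days is an assumption on our part (the figure we recall the paper reporting for its second-order CIFAR-10 search) and should be verified against the paper's tables.

```python
import math

# Baseline search costs in GPU days, as quoted in the text above.
BASELINES = {
    "RL (Zoph et al., 2018)": 2000.0,
    "regularized evolution (Real et al., 2018)": 3150.0,
}
# ASSUMPTION: approximate DARTS search cost in GPU days; verify against the paper.
DARTS_GPU_DAYS = 4.0


def orders_of_magnitude(baseline_gpu_days, new_gpu_days):
    """log10 of the compute ratio; a value near 3 means 'three orders of magnitude'."""
    return math.log10(baseline_gpu_days / new_gpu_days)


if __name__ == "__main__":
    for name, days in BASELINES.items():
        ratio = days / DARTS_GPU_DAYS
        print(f"{name}: {ratio:.1f}x more compute, "
              f"{orders_of_magnitude(days, DARTS_GPU_DAYS):.2f} orders of magnitude")
```

With these inputs, regularized evolution uses 787.5 times more compute than DARTS (log10 ≈ 2.90), consistent with the "three orders of magnitude" wording.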

URL: https://arxiv.org/abs/1806.09055

2. References

Introduction to AutoML and NAS

DARTS

DARTS: mathematics

DARTS: tutorial

DARTS: multi-gpu extension

http://openresearch.ai/t/darts-differentiable-architecture-search/355