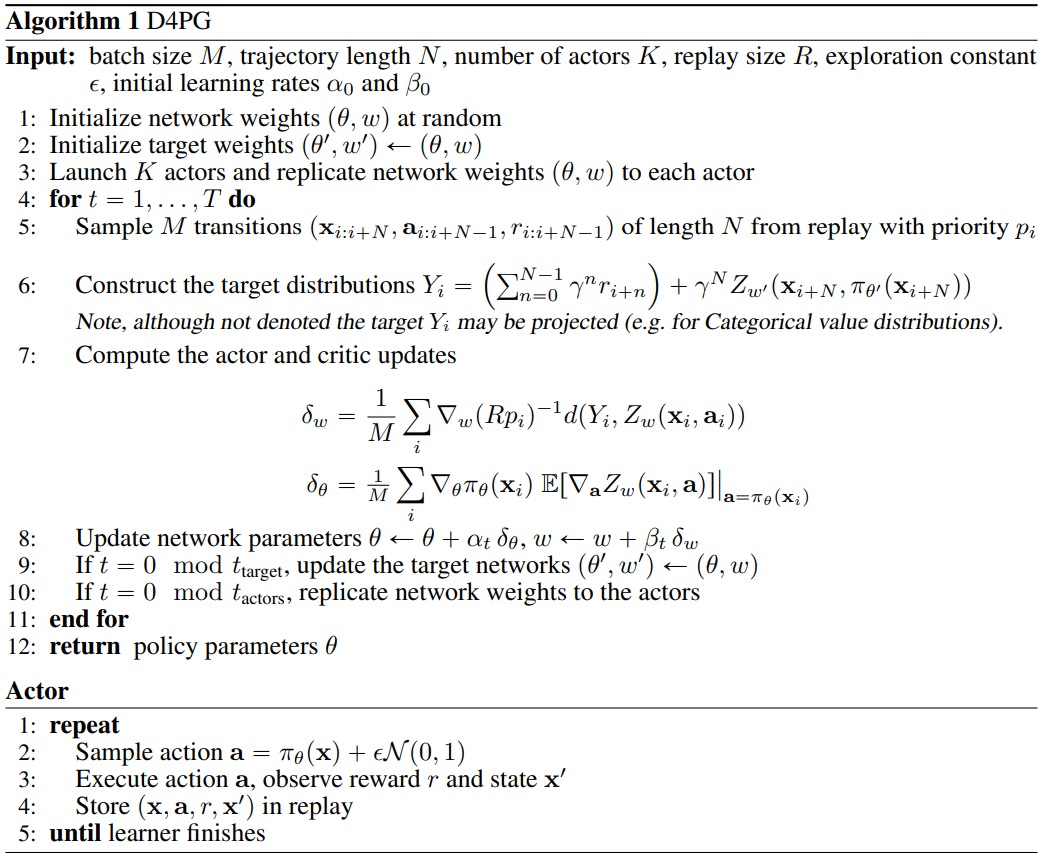

This work adopts the very successful distributional perspective on reinforcement learning and adapts it to the continuous control setting. We combine this within a distributed framework for off-policy learning in order to develop what we call the Distributed Distributional Deep Deterministic Policy Gradient algorithm, D4PG. We also combine this technique with a number of additional, simple improvements such as the use of N-step returns and prioritized experience replay. Our results show that across a wide variety of simple control tasks, difficult manipulation tasks, and a set of hard obstacle-based locomotion tasks the D4PG algorithm achieves state-of-the-art performance.

URL: arxiv.org/abs/1804.08617

Topic: Distributional RL

1. Introduction

In this work, we consider a number of modifications to the Deep Deterministic Policy Gradient (DDPG) algorithm (Lillicrap et al., 2015), an off-policy actor-critic method. In particular, the policy gradient used to update the actor-network depends only on a learned critic. This means that any improvements to the critic learning procedure will directly improve the quality of the actor updates. In this work, we utilize a distributional (Bellemare et al., 2017) version of the critic update which provides a better, more stable learning signal. Such distributions model the randomness due to intrinsic factors, among these is the inherent uncertainty imposed by function approximation in a continuous environment. We will see that using this distributional update directly results in better gradients and hence improves the performance of the learning algorithm.

Due to the fact that DDPG is capable of learning off-policy, it is also possible to modify the way in which experience is gathered. In this work we utilize this fact to run many actors in parallel, all feeding into a single replay table. This allows us to seamlessly distribute the task of gathering experience, which we implement using the ApeX framework (Horgan et al., 2018). This results in significant savings in terms of wall-clock time for difficult control tasks. We will also introduce a number of small improvements to the DDPG algorithm.

1.1. Related Works

Historically, estimation of the policy gradient has relied on the likelihood ratio trick (see e.g. Glynn, 1990), more commonly known as REINFORCE (Williams, 1992). Modern variants of these so-called “vanilla” policy gradient methods include the work of (Mnih et al., 2016). Alternatively, one can consider second-order or “natural” variants of this objective, a set of techniques that include e.g. the Natural Actor-Critic (Peters & Schaal, 2008) and Trust Region Policy Optimization (TRPO) (Schulman et al., 2015) algorithms. More recently Proximal Policy Optimization (PPO) (Schulman et al., 2017), which can be seen as an approximation of TRPO, has proven very effective in large-scale distributed settings. Often, however, algorithms of this form are restricted to learning on-policy, which can limit both the amount of data reuse as well as restrict the types of policies that are used for exploration.

The Deterministic Policy Gradient (DPG) algorithm (Silver et al., 2014) upon which this work is based starts from a different set of ideas, namely the policy gradient theorem of (Sutton et al., 2000). The deterministic policy gradient theorem builds upon this earlier approach but replaces the stochastic policy with one that includes no randomness. This approach is particularly important because it had previously been believed that the deterministic policy gradient did not exist in a model-free setting. DPG was later built upon by Lillicrap et al. (2015) who extended this algorithm and made use of a deep neural network as the function approximator, primarily as a mechanism for extending these results to work with vision-based inputs. Further, this entire endeavor lends itself very readily to an off-policy actor-critic architecture such that the actor’s gradients depend only on derivatives through the learned critic. This means that by improving the estimation of the critic one is directly able to improve the actor gradients. Most interestingly, there have also been recent attempts to distribute updates for the DDPG algorithm, (e.g. Popov et al., 2017), and more generally in this work, we build on the work of (Horgan et al., 2018) for implementing distributed actors.

1.2. Backgrounds

In this standard setup, the agent's behavior is controlled by a policy $\pi: \mathcal{X} \rightarrow \mathcal{A}$ which maps each observation to an continous action. The state-action value function, or Q-function, and is commonly used to evaluate the quality of a policy. While it is possible to derive an updated policy directly from $Q_{\pi}$, such an approach typically requires maximizing this function with respect to $\mathbf{a}$ and is made complicated by the continuous action space. Instead we will consider a parameterized policy $\pi_{\theta}$ and maximize the expected value of this policy by optimizing $J(\theta)=\mathbb{E}\left[Q_{\pi_{\theta}}\left(\mathbf{x}, \pi_{\theta}(\mathbf{x})\right)\right] .$ By making use of the deterministic policy gradient theorem (Silver et al., 2014 ) one can write the gradient of this objective as,

$\nabla_{\theta} J(\theta) \approx \mathbb{E}_{\rho}\left[\left.\nabla_{\theta} \pi_{\theta}(\mathbf{x}) \nabla_{\mathbf{a}} Q_{\pi_{\theta}}(\mathbf{x}, \mathbf{a})\right|_{\mathbf{a}=\pi_{\theta}(\mathbf{x})}\right] \quad (2)$

where $\rho$ is the state-visitation distribution associated with some behavior policy. Note that by letting the behavior policy differ from $\pi$ we are able to empirically evaluate this gradient using data gathered off-policy. While the exact gradient given by (2) assumes access to the true value function of the current policy, we can instead approximate this quantity with a parameterized critic $Q_{w}(\mathbf{x}, \mathbf{a})$. By introducing the Bellman operator,

$\left(\mathcal{T}_{\pi}, Q\right)(\mathbf{x}, \mathbf{a})=r(\mathbf{x}, \mathbf{a})+\gamma \mathbb{E}\left[Q\left(\mathbf{x}^{\prime}, \pi\left(\mathbf{x}^{\prime}\right)\right) \mid \mathbf{x}, \mathbf{a}\right]$

whose expectation is taken with respect to the next state $\mathrm{x}^{\prime}$, we can minimize the temporal difference (TD) error, i.e. the difference between the value function before and after applying the Bellman update. Typic ally the TD error will be evaluated under separate target policy and value networks. i.e. networks with separate parameters $\left(\theta^{\prime}, w^{\prime}\right)$, in order to stabilize learning. By taking the two-norm of this error we can write the resulting loss as,

$L(w)=\mathbb{E}_{\rho}\left[\left(Q_{w}(\mathbf{x}, \mathbf{a})-\left(\mathcal{T}_{\pi_{\theta^{\prime}}} Q_{w^{\prime}}\right)(\mathbf{x}, \mathbf{a})\right)^{2}\right]$

In practice, we will periodically replace the target networks with copies of the current network weights. Finally, by training a neural network policy using the deterministic policy gradient in (2) and training a deep neural to minimize the TD error in (4) we obtain the Deep Deterministic Policy Gradient (DDPG) algorithm (Lillicrap et al., 2016). Here a sample-based approximation to these gradients is employed by using data gathered in some replay table.

2. Distributed Distrubtional DDPG

The approach taken in this work starts from the DDPG algorithm and includes a number of enhancements. These extensions, which we will detail in this section, include a distributional critic update, the use of distributed parallel actors, N-step returns, and prioritization of the experience replay.