In offline reinforcement learning (RL), the goal is to learn a highly rewarding policy based solely on a dataset of historical interactions with the environment. The ability to train RL policies offline would greatly expand where RL can be applied, its data efficiency, and its experimental velocity. Prior work in offline RL has been confined almost exclusively to model-free RL approaches. In this work, we present MOReL, an algorithmic framework for model-based offline RL. This framework consists of two steps: (a) learning a pessimistic MDP (P-MDP) using the offline dataset; (b) learning a near-optimal policy in this P-MDP. The learned P-MDP has the property that for any policy, the performance in the real environment is approximately lower-bounded by the performance in the P-MDP. This enables it to serve as a good surrogate for purposes of policy evaluation and learning, and overcome common pitfalls of model-based RL like model exploitation. Theoretically, we show that MOReL is minimax optimal (up to log factors) for offline RL.

URL: arxiv.org/pdf/2005.05951.pdf

Topic: Offline RL

1. Introduction

Due to the use of a static dataset, offline RL faces unique challenges. Over the course of learning, the agent has to evaluate and reason about various candidate policy updates. This offline policy evaluation is particularly challenging due to the deviation between the state visitation distribution of the candidate policy and the logging policy. Furthermore, this difficulty is exacerbated over the course of learning as the candidate policies increasingly deviate from the logging policy. This change in distribution, as a result of policy updates, is typically called a distribution shift and constitutes a major challenge in offline RL. Recent studies show that directly using off-policy RL algorithms with an offline dataset yields poor results due to distribution shift and function approximation errors [15, 16, 17]. To overcome this, prior works have proposed modifications like Q-network ensembles [15, 18] and regularization towards the data logging policy [19, 16, 18]. Most notably, prior work in offline RL has been confined almost exclusively to model-free methods [20, 15, 16, 19, 17, 18, 21].

Model-based RL (MBRL) presents an alternate set of approaches involving the learning of approximate dynamics models which can subsequently be used for policy search. MBRL enables the use of generic priors like smoothness and physics [22] for model learning, and a wide variety of planning algorithms [23, 24, 25, 26, 27]. As a result, MBRL algorithms have been highly sample efficient for online RL [28, 29]. However, direct use of MBRL algorithms with offline datasets can prove challenging, again due to the distribution shift issue. In particular, since the dataset may not span the entire state-action space, the learned model is unlikely to be globally accurate. As a result, planning using a learned model without any safeguards against model inaccuracy can result in “model exploitation” [30, 31, 29, 28], yielding poor results [32]. In this context, we study the pertinent question of how to effectively regularize and adapt model-based methods for offline RL.

The principal contribution of our work is the development of MOReL (Model-based Offline Reinforcement Learning), a novel model-based framework for offline RL (see figure 1 for an overview). MOReL consists of two modular steps: (a) learning a pessimistic MDP (P-MDP) using the offline dataset, and (b) learning a near-optimal policy for the P-MDP. For any policy, the performance in the true MDP (environment) is approximately lower bounded by the performance in the P-MDP, making it a suitable surrogate for purposes of policy evaluation and learning. This also guards against model exploitation, which often plagues MBRL. The P-MDP partitions the state space into “known” and “unknown” regions, and uses a large negative reward for unknown regions. This provides a regularizing effect during policy learning by heavily penalizing policies that visit unknown states. Such a regularization in the space of state visitations, afforded by a model-based approach, is particularly well suited for offline RL. In contrast, model-free algorithms [16, 18] are forced to regularize the policies directed towards the data logging policy, which can be overly conservative. Theoretically, we establish upper bounds for the sub-optimality of a policy learned with MOReL and a lower bound for the sub-optimality of a policy learnable by any offline RL algorithm. We find that these bounds match up to log factors, suggesting that MOReL is nearly minimax optimal.

2. MOReL

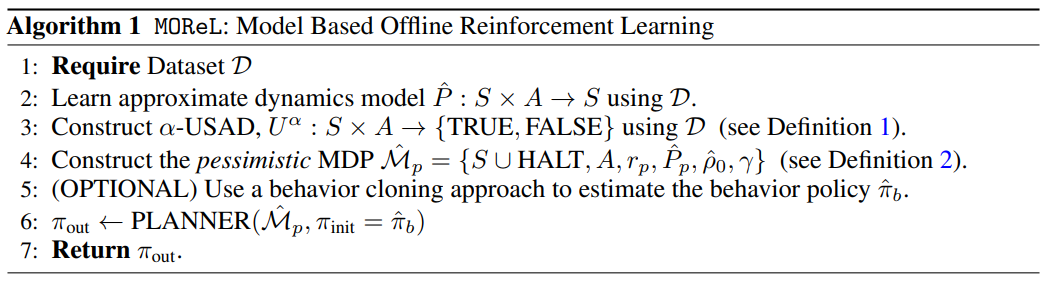

For ease of exposition and clarity, we first begin by presenting an idealized version of MOReL, for which we also establish theoretical guarantees. Subsequently, we describe a practical version of MOReL that we use in our experiments. Algorithm 1 presents the broad framework of MOReL. We now study each component of MOReL in greater detail.

Learning the dynamics model: The first step involves using the offline dataset to learn an approximate dynamics model $\hat{P}(\cdot \mid s, a)$. This can be achieved through maximum likelihood estimation or other techniques from generative and dynamics modeling [61, 62, 63]. Since the offline dataset may not span the entire state space, the learned model may not be globally accurate. So, a naive MBRL approach that directly plans with the learned model may over-estimate rewards in unfamiliar parts of the state space, resulting in a highly sub-optimal policy [32]. We overcome this with the next step.

Unknown state-action detector (USAD): We partition the state-action space into known and unknown regions based on the accuracy of the learned model as follows.

Definition 1. (α-USAD) Given a state-action pair (s, a), define an unknown state action detector as:

$U^{\alpha}(s, a)=\left\{\begin{array}{ll}\text { FALSE (i.e. Known) } & \text { if } D_{T V}(\hat{P}(\cdot \mid s, a), P(\cdot \mid s, a)) \leq \alpha \text { can be guaranteed } \\ \text { TRUE (i.e. Unknown) } & \text { otherwise }\end{array}\right.$

Here $D_{T V}(\hat{P}(\cdot \mid s, a), P(\cdot \mid s, a))$ denotes the total variation distance between $\hat{P}(\cdot \mid s, a)$ and $P(\cdot \mid s, a)$. Intuitively, USAD provides confidence about where the learned model is accurate. It flags state actions for which the model is guaranteed to be accurate as "known" while flagging state-actions where such a guarantee cannot be ascertained as "unknown". Note that USAD is based on the ability to guarantee accuracy, and is not an inherent property of the model. In other words, there could be states where the model is actually accurate but flagged as unknown due to the agent's inability to guarantee accuracy. Two factors contribute to USAD's effectiveness: (a) data availability: having sufficient data points "close" to the query; (b) quality of representations: certain representations, like those based on physics, can lead to better generalization guarantees. This suggests that larger datasets and research in representation learning can potentially enable stronger offline RL results.

Pessimistic MDP construction: We now construct a pessimistic MDP (P-MDP) using the learned model and USAD, which penalizes policies that venture into unknown parts of state-action space.

Definition 2. The $(\alpha, \kappa)$ -pessimistic MDP is described by $\hat{\mathcal{M}}_{p}:=\left\{S \cup H A L T, A, r_{p}, \hat{P}_{p}, \hat{\rho}_{0}, \gamma\right\}$. Here, $S$ and $A$ are states and actions in the MDP M. HALT is an additional absorbing state we introduce into the state space of $\mathcal{M}_{p} . \hat{\rho}_{0}$ is the initial state distribution learned from the dataset $\mathcal{D}$. $\gamma$ is the discount factor (same as M). The modified reward and transition dynamics are given by:

$\hat{P}_{p}\left(s^{\prime} \mid s, a\right)=\left\{\begin{array}{ll}\delta\left(s^{\prime}=\mathrm{HALT}\right) & \text { if } U^{\alpha}(s, a)=\mathrm{TRUE} \\ \hat{P}\left(s^{\prime} \mid s, a\right) & \text { or } s=\mathrm{HALT} \\ \text { otherwise }\end{array} \quad r_{p}(s, a)=\left\{\begin{array}{ll}-\kappa & \text { if } s=\mathrm{HALT} \\ r(s, a) & \text { otherwise }\end{array}\right.\right.$

$\delta\left(s^{\prime}=\mathrm{HALT}\right)$ is the Dirac delta function, which forces the MDP to transition to the absorbing state HALT. For unknown state-action pairs, we use a reward of $-\kappa$, while all known state-actions receive the same reward as in the environment. The P-MDP heavily punishes policies that visit unknown states, thereby providing a safeguard against distribution shift and model exploitation.

Planning: The final step in MOReL is to perform planning in the P-MDP defined above. For simplicity, we assume a planning oracle that returns an $\epsilon_{\pi}$ -sub-optimal policy in the P-MDP. A number of algorithms based on MPC [23, 64], search-based planning [65, 25], dynamic programming [49, 26], or policy optimization $[27,51,66,67]$ can be used to approximately realize this.