In this work, we explore how prior tasks can inform an agent about how to explore effectively in new situations. We introduce a novel gradient-based fast adaptation algorithm – model agnostic exploration with structured noise (MAESN) – to learn exploration strategies from prior experience. The prior experience is used both to initialize a policy and to acquire a latent exploration space that can inject structured stochasticity into a policy, producing exploration strategies that are informed by prior knowledge and are more effective than random action-space noise. We show that MAESN is more effective at learning exploration strategies when compared to prior meta-RL methods, RL without learned exploration strategies, and task-agnostic exploration methods. We evaluate our method on a variety of simulated tasks: locomotion with a wheeled robot, locomotion with a quadrupedal walker, and object manipulation.

URL: arxiv.org/pdf/1802.07245.pdf

Topic: Meta-RL

1. Introduction

While meta-learning has been shown to be effective for fast adaptation on several RL problems [6, 5], the prior methods generally focus on tasks where exploration is trivial where such adaptation regimes differ substantially from stochastic exploration. Tasks where discovering the goal requires exploration that is both stochastic and structured cannot be easily captured by such methods, as demonstrated in our experiments. Specifically, there are two major shortcomings with these methods: (1) The stochasticity of the policy is limited to time-invariant noise from action distributions, which fundamentally limits the exploratory behavior it can represent. (2) For RNN based methods, the policy is limited in its ability to adapt to new environments, since adaptation is performed with a forward pass of the recurrent network. If this single forward pass does not produce good behavior, there is no further mechanism for improvement. Methods that adapt by gradient descent, such as MAML, simply revert to standard policy gradient and can make slow but steady improvement in the worst case, but do not address (1).

2. Preliminaries

In meta-RL, we consider a distribution $\tau_{i} \sim p(\tau)$ over tasks, where each task $\tau_{i}$ is a different Markov decision process (MDP) $M_{i}=\left(S, A, P_{i}, R_{i}\right)$, with state-space $S$, action space $A$, transition distribution $P_{i}$, and reward function $R_{i}$. The reward function and transitions vary across tasks. Meta-RL aims to learn a policy that can adapt to maximize the expected reward for novel tasks from $p(\tau)$ as efficiently as possible. We build on the gradient-based meta-learning framework of MAML [6], which trains a model in such a way that it can adapt quickly with standard gradient descent, which in RL corresponds to the policy gradient. The meta-training objective for MAML can be written as,

$\max _{\theta} \sum_{\tau_{i}} \mathbb{E}_{\pi_{\theta_{i}^{\prime}}}\left[\sum_{t} R_{i}\left(s_{t}\right)\right] \quad \theta_{i}^{\prime}=\theta+\alpha \mathbb{E}_{\pi_{\theta}}\left[\sum_{t} R_{i}\left(s_{t}\right) \nabla_{\theta} \log \pi_{\theta}\left(a_{t} \mid s_{t}\right)\right] \quad (1)$

The intuition behind this optimization objective is that, since the policy will be adapted at meta-test time using the policy gradient, we can optimize the policy parameters so that one step of policy gradient improves its performance on any meta-training task as much as possible. Since MAML reverts to conventional policy gradient when faced with out-of-distribution tasks, it provides a natural starting point for us to consider the design of a meta-exploration algorithm: by starting with a method that is essentially on par with task-agnostic RL methods that learn from scratch in the worst case, we can improve on it to incorporate the ability to acquire stochastic exploration strategies from experience, while preserving asymptotic performance.

3. Model Agnostic Exploration with Structured Noise

In this section, we introduce a novel method for learning structured exploration behavior based on gradient-based meta-learning. Our algorithm, which we call model agnostic exploration with structured noise (MAESN), combines structured stochasticity with MAML. MAESN is a gradient-based meta-learning algorithm that introduces stochasticity not just by perturbing the actions, but also through a learned latent space that allows exploration to be time-correlated. Both the policy and the latent space are trained with meta-learning to explicitly provide for fast adaptation to new tasks. When solving new tasks at meta-test time, a different sample is generated from this latent space for each episode (and kept fixed throughout the episode), providing structured and temporally correlated stochasticity. Because of meta-training, the distribution over latent variables is adapted to the task quickly via policy gradient updates. We first show how structured stochasticity can be introduced through latent spaces, and then describe how both the policy and the latent space can be meta-trained to form our overall algorithm.

3.1. Policies with Latent State

Typical stochastic policies parameterize action distributions $\pi_{\theta}(a \mid s)$ in a way that is independent for each time step. This representation has no notion of temporally coherent randomness throughout the trajectory since stochasticity is added independently at each step where additive noise is sampled independently for every time step. This limits the range of possible exploration strategies since the policy essentially "changes its mind" about what it wants to explore at each time step. The distribution $\pi_{\theta}(a \mid s)$ is also typically represented with simple parametric families, such as unimodal Gaussians, which restrict its expressivity. To incorporate temporally coherent exploration, we can condition the policy on per-episode random variables drawn from a learned latent distribution. Since these latent variables are sampled only once per episode, they provide temporally coherent stochasticity. The resulting policies can be written as $\pi_{\theta}(a \mid s, z)$, where $z \sim q_{\omega}(z)$, and $q_{\omega}(z)$ is the latent variable distribution with parameters $\omega$. For example, in our experiments we consider diagonal Gaussian distributions of the form $q_{\omega}(z)=\mathcal{N}(\mu, \sigma)$, such that $\omega=\{\mu, \sigma\}$. Structured stochasticity of this form can provide more coherent exploration, by sampling entire behaviors or goals, rather than simply relying on independent random actions. To achieve fast adaptation, we can incorporate meta-learning as discussed below.

3.2. Meta-Learning Latent Variable Policies

Given a latent variable conditioned policy as described above, our goal is to train it so as to capture coherent exploration strategies from a family of training tasks that enable fast adaptation to new tasks from a similar distribution. We use a combination of variational inference and gradient-based meta-learning to achieve this. To that end, we jointly learn a set of policy parameters and a set of latent space distribution parameters, such that they achieve optimal performance for each task after a policy gradient adaptation step. This procedure encourages the policy to actually make use of the latent variables for exploration. From one perspective, MAESN can be understood as augmenting MAML with a latent space to inject structured noise. From a different perspective, it amounts to learning a structured latent space, similar to [9], but trained for quick adaptation to new tasks.

To formalize the objective for meta-training, we introduce a model parameterization with policy parameters $\theta$ shared across all tasks, and per-task variational parameters $\omega_{i}$ for tasks $i=1,2 \ldots, N$, which parameterize a per-task latent distribution $q_{\omega_{i}}\left(z_{i}\right)$. We refer to $\theta, \omega_{i}$ as the pre-update parameters. Meta-training involves optimizing the pre-update parameters on a set of training tasks, learn pre-update latent parameters $\omega_{i}$, and policy parameters $\theta$, such that after a gradient step, the post-update latent parameters $\omega_{i}^{'}$, policy parameters $\theta^{'}$, are optimal for the task. As is standard in variational inference, we also add to the objective the KL-divergence between the per-task pre-update distributions $q_{\omega_{i}}\left(z_{i}\right)$ and a prior $p(z)$, which in our experiments is simply a unit Gaussian. Without this additional loss, the per-task parameters ωi can simply memorize task-specific information. The KL loss ensures that sampling z ∼ p(z) for a new task at meta-test time still produces effectively structured exploration.

For every iteration of meta-training, we sample from the latent variable conditioned policies represented by the pre-update parameters $\theta, \omega_{i}$, perform an "inner" gradient update on the variational parameters for each task (and, optionally, the policy parameters) to get the task-specific post-update parameters $\theta_{i}^{\prime}, \omega_{i}^{\prime}$, and then propagate gradients through this update to obtain a meta-gradient for $\theta$ $\omega_{0}, \omega_{1}, \ldots, \omega_{N}$ such that the sum of expected task rewards over all tasks using the post-update latentconditioned policies $\theta_{i}^{\prime}, \omega_{i}^{\prime}$ is maximized, while the KL divergence of pre-update distributions $q_{\omega_{i}}\left(z_{i}\right)$ against the prior $p\left(z_{i}\right)$ is minimized. Note that the KL-divergence loss is applied to the pre-update distributions $q_{\omega_{i}}$, not the post-update distributions, so the policy can exhibit very different behaviors on each task after the inner update. Computing the gradient of the reward under the post-update parameters requires differentiating through the inner policy gradient term, as in MAML $[6]$.

A concise description of the meta-training procedure is provided in Algorithm 1, and the computation graph representing MAESN is shown in Fig 1. The full meta-training problem can be stated mathematically as,

$\max _{\theta, \omega_{i}} \sum_{i \in \text { tasks }} E_{a_{t} \sim \pi\left(a_{t} \mid s_{t} ; \theta_{i}^{\prime}, z_{i}^{\prime}\right)} z_{z_{i}^{\prime} \sim q_{\omega_{i}^{\prime}}(.)}^{(.)}\left[\sum_{t} R_{i}\left(s_{t}\right)\right]-\sum_{i \in \text { tasks }} D_{K L}\left(q_{\omega_{i}}(.) \| p(z)\right) \quad (2)$

$\omega_{i}^{\prime}=\omega_{i}+\alpha_{\omega} \circ \nabla_{\omega_{i}} E_{a_{t} \sim \pi\left(a_{t} \mid s_{t} ; \theta, z_{i}\right)}\left[\sum_{t} R_{i}\left(s_{t}\right)\right] \quad (3)$

$\theta_{i}^{\prime}=\theta+\alpha_{\theta} \circ \nabla_{\theta} E_{a_{t} \sim \pi\left(a_{t} \mid s_{t} ; \theta, z_{i}\right)} z_{i \sim q_{\omega_{i}}(.)}\left[\sum_{t} R_{i}\left(s_{t}\right)\right] \quad (4)$

The two objective terms are the expected reward under the post-update parameters for each task and the KL-divergence between each task’s pre-update latent distribution and the prior. The α values are per-parameter step sizes, and ◦ is an elementwise product. The last update (to θ) is optional. We found that we could in fact obtain better results simply by omitting this update, which corresponds to meta-training the initial policy parameters θ simply to use the latent space efficiently, without training the parameters themselves explicitly for fast adaptation. Including the θ update makes the resulting optimization problem more challenging. MAESN enables structured exploration by using the latent variables z, while explicitly training for fast adaptation via policy gradient. We could in principle train such a model without meta-training for adaptation at all, which resembles the model proposed by [9]. However, as we will show in our experimental evaluation, meta-training produces substantially better results. Interestingly, during the course of meta-training, we find that the pre-update variational parameters $\omega_{i}$ for each task is usually close to the prior at convergence. This has a simple explanation: meta training optimizes for post-update rewards, after $\omega_{i}$ have been updated to $\omega_{i}^{\prime}$, so even if $\omega_{i}$ matches the prior, it does not match the prior after the inner update. This allows the learned policy to succeed on new tasks at meta-test time for which we do not have a good initialization for $\omega$, and have no choice but, to begin with the prior, as discussed in the next section.

3.3. Using the Latent Space for Exploration

Let us consider a new task $\tau_{i}$ with reward $R_{i}$, and a learned model with policy parameters $\theta$. The variational parameters $\omega_{i}$ are specific to the tasks used during meta-training, and will not be useful for a new task. However, since the KL-divergence loss (Eqn 3) encourages the pre-update parameters to be close to the prior, all of the variational parameters $\omega_{i}$ are driven to the prior at convergence (Fig 5a). Hence, for exploration in a new task, we can initialize the latent distribution to the prior $q_{\omega}(z)=p(z)$. In our experiments, we use the prior with $\mu=0$ and $\sigma=I$. Adaptation to a new task is then done by simply using the policy gradient to adapt $\omega$ via backpropagation on the RL objective, $\max _{\omega} E_{a_{t} \sim \pi\left(a_{t} \mid s_{t}, \theta, z\right), z \sim q_{\omega}(.)}\left[\sum_{t} R\left(s_{t}\right)\right]$ where $R$ represents the sum of rewards along the trajectory. Since we meta-trained to adapt $\omega$ in the inner loop, we adapt these parameters at meta-test time as well. To compute the gradients with respect to $\omega$, we need to backpropagate through the sampling operation $z \sim q_{\omega}(z)$, using either likelihood ratio or the reparameterization trick(if possible). The likelihood ratio update is

$\nabla_{\omega} \eta=E_{a_{t} \sim \pi\left(a_{t} \mid s_{t} ; \theta, z\right)}\left[\nabla \sim q_{\omega}(.) \quad\left[\nabla_{\omega} \log q_{\omega}(z) \sum_{t} R\left(s_{t}\right)\right]\right. \quad (5)$

This adaptation scheme has the advantage of quick learning on new tasks because of meta-training, while maintaining good asymptotic performance since we are simply using the policy gradient.

3.4. Analysis of Structured Latent Space

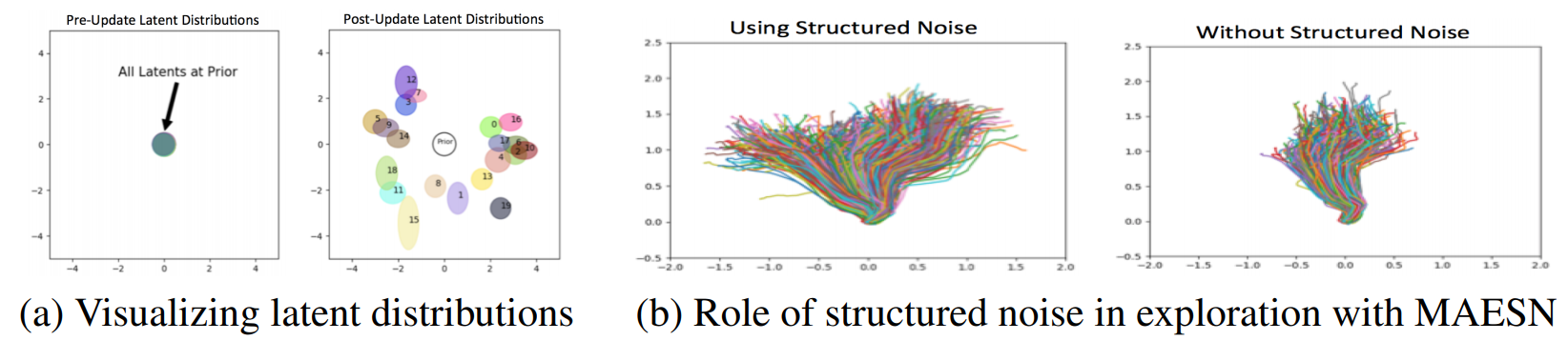

Let us consider a new task $\tau_{i}$ with reward $R_{i}$, and a learned model with policy parameters We investigate the structure of the learned latent space in the manipulation task by visualizing pre-update $\omega_{i}=\left(\mu_{i}, \sigma_{i}\right)$ and post-update $\omega_{i}^{\prime}=\left(\mu_{i}^{\prime}, \sigma_{i}^{\prime}\right)$ parameters for a 2D latent space. The variational distributions are plotted as ellipses. As can be seen from Fig 5a, pre-update parameters are all driven to the prior $\mathcal{N}(0, I)$, while the post-update parameters move to different locations in the latent space to adapt to their respective tasks. This indicates that the meta-training process effectively utilizes the latent variables, but also minimizes the KL-divergence against the prior, ensuring that initializing $\omega$ to the prior for a new task will produce effective exploration.

We also evaluate whether the noise injected from the latent space learned by MAESN is actually used for exploration. We observe the exploratory behavior displayed by a policy trained with MAESN when the latent variable z is kept fixed, as compared to when it is sampled from the learned latent distribution. We can see from Fig. 5b that, although there is some random exploration even without latent space sampling, the range of trajectories is much broader when z is sampled from the prior.